In this article I’m analysing the full text of J. R. R. Tolkien book - The Hobbit Or There And Back Again. My main focus is on extracting information regarding book sentiment as well as getting comfortable with one of NLP’s techniques - text chunking. We’re going to answer who are the top characters of the book, how the sentiment changes with each chapter as well as - which dwarf pair has the most occurrences together!

Exploratory Analysis[]

A quick overall look at the data is always due. It helps to get to know what we’re dealing with, verify data quality, but also allows for some initial insights. By tokenizing sentences I got their total number to be 4915. They are split into 18 chapters.

Sentence count per chapter varies greatly. The shortest chapter being 150 sentences long, the longest - more than triple that. We can see that “plot thickens” gradually, when chapters get longer from the third to the tenth. Then with two sharp changes, their length decreases towards the end. Looking at sentence length there’s much more stability. The biggest difference between the lowest average and highest average sentence length is around 30% (chapters with shortest sentences averaging around 45 and longest around 60 words). If we look closely though, the slight differences show a similar pattern as chapter length - the longest sentences are in chapter eighth to tenth. The shortest - third, eleventh, fourteenth and the last one. I did a small research on sentence lengths in prose, and it is recommended (e.g. here) that the average length should be between 15-20 words. If we treat this as a reference, Tolkien’s style of writing stands out as visibly different.

Sentiment Analysis[]

I started with getting a sentiment score for each of the sentences in the text. For this purpose I used an approach called VADER, that’s available in nltk.sentiment package. By calling polarity_scores on SentimentIntensityAnalyzer we get 4 scores: compound, pos, neg and neu. Compound score is a unidimensional, normalised sentence score based on ratings of the words in lexicon. It takes value from -1 to 1. By the standard, a sentence is considered a neutral one with a compound score between -0.05 and 0.05 - all that’s above is positive, all that’s below is negative.

Pos, neg and neu are proportions of text that can be assigned into each category and sum to 1.

For the purpose of this analysis I will use compound score, but before an analysis - let’s see how that works on Hobbit text (the four numbers are respectively: compound, pos, neg and neu scores):

I found the following scoring funny (compound score = -0.296).

I totally support it, everyone should always have a choice.

But on the other hand it shows flaws of this approach. It seems that because this sentence is so short, it got heavily impacted by the presence of “no”, while the sentence itself is a completely neutral statement. Knowing that on average the sentences are at least 40 words long, I feel confident enough to move forward using this approach in the analysis.

Looking at the above plot, we can see that the novel starts with a positive sentiment, possibly an excitement for the starting journey. It then stays mostly within neutral range, reaching a negative score only once, just before ending again on a positive note.

Let’s take a more detailed look into each chapter and the percent of sentences by their sentiment. In general, positive sentences cover between 25% and 40%, and negative sentences around 30% of all sentences. Although there are no strong fluctuations in the ratio of each sentiment group by chapter, I found an interesting pattern in the percentage of positive sentences. With one exception, each increase is followed by a decrease in percent of positive sentences, and the other way around. Could it be teasing the reader with the ever changing atmosphere throughout the book? That’s a very long shot, but the plot tells a nice story.

Text Chunking[]

By definition, chunking takes multitoken sequences in order to segment and label them. The idea gets simple when shown on an example.

Named entities[]

In the first attempt we’ll extract named entities from the text in an automated way - I know the characters from the book and could list them, but it’s easy to miss something important. This task is made even simpler, as there is a nltk.ne_chunk() function serving this purpose provided in the library.

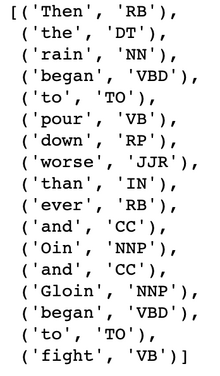

For the input we need to prepare a sentence where each word is turned to a tuple of a word and its part-of-speech tag, e.g.:

When feeding each word of the sentence to nltk.ne_chunk(), we get a nltk.Tree object as a result, which in a clear graphical way shows which part matches the chunk criteria. In this example that means being a NNP - singular proper noun, which is met by “Oin” and “Gloin”.

I found the nltk.ne_chunk to work with my data well enough. Additional work was needed though. Many common words were counted as named entities because of being written with capital letter e.g. “Hill”. My idea to limit the list was to remove all the chunks where word’s lemma written in lower case was included in corpora of English words.

For this purpose I used nltk.corpus.words.words(). It still can be a bit tricky and requires some domain knowledge (i.e. reading the book of course), as for example this method would exclude Bilbo from the named entities.

There were then only a few words that I decided to remove manually, e.g.: 'Tralalalally' and 'Blimey'. Resulting list of top 15 named entities (based on their occurrence count) from the whole book looks like below.

We can go a step further and analyse occurrences of top characters by the chapter in which they occurred the most. The three major characters - Bilbo, Thorin and Gandalf, to no surprise, had their presence throughout the whole story. Although Gandalf was definitely more present in the first chapters. Gollum stands out as a one-chapter character. Smaug on the other hand gets most attention towards the end of the book, still we can see that he’s been announced in the first chapters already.

We can also match the extracted named entities with sentiment scores of sentences from the previous analysis part and see whether some characters appeared in positive sentences more often than in negative ones.

Except for Elrond, the distribution of sentiment does not differ greatly between characters. From the previous plot we know that Elrond has a relatively small number of occurrences in the book, so his result might be skewed.

This does not necessarily say anything about character being bad or good as it turns out. And it also makes sense, as the sentiment score is being calculated both on the base on the things the character says himself, as well as when he is being described. A happy bad guy may not surface on such a plot. We do know though, that none of the Hobbit characters is put in good/bad surroundings only.

Relations between named entities[]

For learning purposes I wanted to define my own chunking rules. In the first attempt it was done automatically by the already existing function nltk.ne_chunks, but we can choose any set of part-of-speech tags in any configuration to be extracted from the text.

Following grammar consists of 4 NNPs - singular proper nouns. Two middle ones are mandatory, remaining two optional, which is indicated by "?". They are all joined by optional CC (Coordinating conjunction), TO, VBD (Verb, past tense), or IN (Preposition or subordinating conjunction).

My goal was to get any groups of entities connected by a verb like “talked to” as well as by a simple “and”. In practice it results in such 4 chunks extracted from a following sentence:

'Soon Dwalin lay by Balin, and Fili and Kili together, and Dori and Nori and Ori all in a heap, and Oin and Gloin and Bifur and Bofur and Bombur piled uncomfortably near the fire.'

“lay” is considered a NN in terms of part-of-speech, that’s why Dwalin was unfortunately left out by this logic. I counted how many each of these named entity chunks appear together.

We now know which dwarves pair had the most occurrences together - it was Kili and Fili and with no close runner-ups! We also see that there is only one group of dwarf and non-dwarf characters - Bilbo and Balin. There are also pairs of first and last name for the same character (e.g. Bilbo Baggins), this is because any words between nouns are optional in my definition above.

Summary[]

With a few tools we can get a fun insight about a whole book. Although they may not be very accurate - I think it’s very difficult to summarise art with data analysis - it’s a great way to learn new techniques.

Analysis: link

Author: Dorota Mierzwa